In early June, Euranova’s Chief Technology Officer, Sabri Skhiri, attended the IAPP AI Governance Global 2024 conference in Brussels, a seminal event designed to facilitate dialogue and collaboration among business leaders, technology professionals, and legal experts involved in AI development and implementation. In this article, Sabri will delve into some of the keynotes, panels and talks that took place during the summit, highlighting the noteworthy insights and innovations shared by industry leaders.

Keynotes

Shannon Vallor on Overcoming Bias and Unlocking Potential

The keynote was a thought-provoking talk by Shannon Vallor, launching her new book “The AI Mirror.” It offered a fresh perspective on AI, highlighting both the potential pitfalls and the exciting possibilities.

Vallor argues that current AI systems are like distorting mirrors, reflecting back our flaws and limitations. We’ve built AI on a foundation of our past data, essentially creating a technology that reinforces the status quo and bias. This “mirror” shows us where we’ve been, not where we can go, repeating the bias and thoughts from the past.

But Vallor isn’t buying into the dystopian view of AI. Instead, she envisions it as a powerful tool for human progress. AI can be the “guardrail” we need to overcome our limitations and reach our full potential. By leveraging AI’s analytical capabilities, we can free ourselves to focus on creativity, problem-solving, and building a better future.

The keynote challenged me to see AI not as a threat, but as a potential partner in human flourishing. It’s all about using this technology responsibly and with a clear vision for the future we want to create. If you’re interested in navigating the complexities of AI and harnessing its power for good, from a perspective that borders on philosophy, I recommend checking out Vallor’s book.

Google's Matt Brittin on Seizing the AI Opportunity

Matt Brittin, Google’s Chief Business Officer, delivered a keynote address emphasising Google’s commitment to harnessing technology for good. He argued that the most significant risk organisations face today is missing the current opportunity presented by AI.

A History of Timely Innovation:

Brittin drew parallels between the transformative impact of the internet and the potential of AI. Just as the web opened vast resources in 1997, and mobile internet revolutionised access in 2007, AI is now poised for widespread deployment. He highlighted the ubiquity of mobile technology, with 77% of the world owning a mobile phone and 66% having internet access. This creates a fertile ground for AI adoption, similar to the mobile revolution.

Building on a Decade of AI Experience:

Google’s extensive experience in AI development was a central theme. Brittin cited advancements like YouTube video indexing with AI, the TensorFlow framework, AlphaGo’s victory over a human champion, and the development of foundational AI principles in 2016. He emphasised that AI is not new; what’s new is the potential for its large-scale deployment.

AI for a Better World: Boldness, Responsibility, and Collaboration:

The message of the keynote was clear: we must be bold, responsible, and collaborative when harnessing AI’s power. Brittin showcased concrete examples of AI achievements with positive societal impact. He highlighted the use of AI for protein folding, a breakthrough with over 1.8 million researchers utilising the data and 20,000 citations generated. Additionally, the Greenlight project was presented, demonstrating how AI optimises traffic signals with Google Maps data to reduce CO2 emissions.

Unlocking Economic Growth with Everyday AI:

Brittin emphasised the economic potential of AI. He claimed that AI could add 1.2 trillion EUR to the global GDP. However, this requires widespread adoption and integration of AI tools within the global economy.

Responsible AI Governance: Public Trust and Safety:

Brittin acknowledged the public’s concerns about AI governance. He highlighted a survey indicating that 59% of Europeans believe AI will benefit society, while 77% believe collaboration between governments and tech companies is vital for safe AI development. Google’s commitment to responsible AI development was emphasised, with a focus on security, privacy, and safety by default. He mentioned specialised centres in Malaga (security), Munich (privacy), and Dublin (content safety), demonstrating Google’s global approach to responsible AI.

Security and Privacy at the Forefront:

The importance of cybersecurity and user privacy in the AI landscape was addressed. Brittin highlighted the launch of an AI cybersecurity centre in Munich, boasting a 70% improvement in malware detection rates with AI. Additionally, he mentioned Gemini 1.5 Pro’s ability to process an entire malware codebase in 30 seconds, though the source of this information needs further verification.

Building a Better Future with AI:

Brittin concluded by reiterating Google’s commitment to responsible AI principles. He emphasised the potential of AI to address societal challenges, contribute positively to the economy, and boost productivity. The core message was clear: Google aims to provide users with the tools they need, while ensuring the safety they deserve in this new era of AI.

Favourite Panels

AI in Defense: Balancing Power with Responsibility

The speakers were Nikos Loutas, heading data and AI policy at NATO, and Nancy Morgan, a former US National Security executive with experience in AI governance.

The big topic? AI in defence and security. It’s clear AI is becoming a game-changer on the battlefield, but the conversation quickly shifted to the ethical implications. We can’t ignore the potential for harm if this tech falls into the wrong hands.

The panellists tackled the challenges and opportunities of deploying AI responsibly. They dug into how AI might transform the way wars are fought in the future, which sounds both exciting and a little terrifying.

The bottom line? We need to find a way to balance innovation with responsible use. International cooperation, especially with industry and research institutions, seems to be key, even though the defence domain is not subject to the AI Act.

Overall, this session showed the opportunities and challenges of implementing AI governance and responsible AI in defence, a domain that falls outside the scope of the AI Act.

Building AI Governance at Scale

I attended an inspiring panel discussion on AI Governance, featuring experts from Bosch, Capgemini, Google, and Roche. The discussion centred on the challenges and opportunities of implementing global AI governance programs.

Challenges of Global AI Governance:

Operationalising Principles: Roche highlighted the difficulty of implementing AI principles without a strong organisational structure and defined processes.

Integration: Google acknowledged the challenge of integrating AI governance with existing data governance programs, requiring expertise in safety, quality assurance, and responsible AI practices.

Scaling and Adoption: Capgemini emphasised the critical need to operationalise AI governance principles. This involves ensuring adoption across the organisation (with clear roles and accountability), and scaling the program effectively.

Building on Existing Practices:

Roche: Their experience with post-market monitoring for drug safety offered valuable insights. The speaker highlighted the challenge of adapting these processes to software, but also the potential to leverage existing knowledge.

Success Factors for Capgemini:

Stakeholder Engagement: Engaging key stakeholders with clear mandates and budget allocation is crucial.

Integration: Seamless integration with existing governance processes is essential.

Communication: Effective communication with all stakeholders, including owners and business teams, is paramount.

Unique Challenge for Bosch:

This is the first time the EU has regulated a technology in such detail. Bosch highlighted the challenge of incorporating this new framework into their existing governance structure while still addressing the specific needs of AI.

Overall, the panel discussion offered valuable insights into the complexities of establishing and scaling effective AI governance programs in a rapidly evolving technological and regulatory landscape. However, it gave few clues about how such an AI governance framework should actually be deployed.

Favourite Talks

The AI Act - A Deep Dive into Regulations and Responsibilities

This talk provided a comprehensive overview of the EU’s recently adopted AI Act. It delved into the intricacies of this landmark legislation, offering valuable insights for those navigating the world of artificial intelligence. This summary unpacks the key points of the Act, explores the varying classifications of AI systems, and clarifies the roles different players occupy within the AI ecosystem.

Harmonisation: A Unified Front for AI

One of the Act’s core objectives is to establish a consistent set of regulations for AI across the European Union. This harmonisation removes discrepancies between national approaches, fostering a unified environment for the development, deployment, and use of AI technologies.

Prohibiting Practices: Not All AI is Created Equal

The Act draws a clear line in the sand, prohibiting certain AI practices deemed too risky. For example, social scoring systems that assign numerical values to individuals based on behaviour and finances are outlawed due to their potential for manipulation and discrimination.

High-Risk Systems: A Closer Look under the Hood

AI systems classified as high-risk, such as those used in medical diagnosis, law enforcement, transportation and safety, or those affecting individual integrity, fundamental rights or access to finance, are subject to stringent regulations. These regulations aim to ensure transparency, accountability, and responsible use of these powerful tools.

Transparency: Demystifying the Black Box

The Act emphasises transparency as a crucial element in fostering trust in AI. Specific rules mandate that users understand how certain AI systems function. This knowledge empowers users to make informed decisions about their interactions with AI-powered products and services.

General Purpose AI: A Balancing Act

The Act recognises the transformative potential of General Purpose AI (GPAI) models, such as large language models. However, it also acknowledges the potential risks associated with these powerful tools. The Act strikes a balance, requiring transparency obligations from providers of GPAI models without imposing the same level of regulation as with high-risk systems.

Market Monitoring and Enforcement: Keeping the AI Landscape Safe

The Act establishes mechanisms for monitoring the AI market and enforcing its provisions. This ensures responsible development and use of AI technologies while safeguarding the interests of individuals and society as a whole.

Innovation: Fuelling the Future with Responsible AI

While establishing regulations, the Act doesn’t stifle innovation. It aims to create a framework that fosters the responsible development and deployment of AI solutions, ultimately propelling the EU forward in the AI age.

A Spectrum of Risk: Classifying AI Systems

The Act categorises AI systems based on their risk profiles, providing a clear understanding of the regulations that apply to each type. Here’s a breakdown of the categories:

- Prohibited: Unacceptable risk (e.g., social scoring systems).

- High-Risk: Requires strict regulations (e.g., medical AI).

- Transparency Risk: Requires transparency about AI use (e.g., chatbots).

- General Purpose: Subject to transparency obligations (e.g., large language models).
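The four tiers above can be captured in a small lookup table, which is handy when tagging an inventory of AI systems. The Python sketch below is illustrative only: the `RiskTier` enum, the `OBLIGATIONS` mapping, the `obligation_for` helper and the example inventory are hypothetical names, and the one-line obligations simply restate the list above rather than the legal text.

```python
from enum import Enum


class RiskTier(Enum):
    """Risk tiers as summarised in the talk (not the legal wording)."""
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    TRANSPARENCY_RISK = "transparency risk"
    GENERAL_PURPOSE = "general purpose"


# One-line obligation per tier, restating the list above.
OBLIGATIONS = {
    RiskTier.PROHIBITED: "prohibited (unacceptable risk)",
    RiskTier.HIGH_RISK: "strict regulatory requirements apply",
    RiskTier.TRANSPARENCY_RISK: "must be transparent about AI use",
    RiskTier.GENERAL_PURPOSE: "subject to transparency obligations",
}


def obligation_for(tier: RiskTier) -> str:
    """Return the summarised obligation for a given tier."""
    return OBLIGATIONS[tier]


# Hypothetical inventory: the system names and assigned tiers are made up.
inventory = {
    "customer-support chatbot": RiskTier.TRANSPARENCY_RISK,
    "diagnostic imaging assistant": RiskTier.HIGH_RISK,
}

for name, tier in inventory.items():
    print(f"{name}: {tier.value} -> {obligation_for(tier)}")
```

Assigning a real system to a tier is a legal judgement; a table like this only records the outcome of that judgement.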

General Purpose AI: A Closer Look at Risk Management

While GPAI models aren’t subject to the same level of regulation as high-risk systems, the Act emphasises responsible development and deployment. Providers must prioritise transparency, explaining the capabilities and limitations of their models. Additionally, for highly capable GPAI models exceeding a specific computing power threshold, providers need to undertake risk assessments to identify and mitigate potential risks like bias and security vulnerabilities. This is what the Act calls systemic risk: it is based not on the model’s purpose but on its capabilities and on the amount of computing power used to train it. The more compute goes into training, the more impactful the model is presumed to be, which is what raises the systemic risk.
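The compute-based trigger can be sketched in a few lines of Python. The talk only mentioned a "specific computing power threshold"; the value used below, 10^25 floating-point operations of cumulative training compute, is the presumption threshold in the Act's GPAI provisions and is added here as an assumption to be checked against the official text. The function and constant names are hypothetical, and the compute estimate is a common rule of thumb for dense transformer models, not something the Act prescribes.

```python
# Assumption (not from the talk): the AI Act presumes systemic risk for a GPAI
# model whose cumulative training compute is greater than 10^25 FLOPs.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def presumed_systemic_risk(training_flops: float) -> bool:
    """True when cumulative training compute exceeds the threshold.

    The check depends only on compute, not on the model's intended purpose.
    """
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


def estimate_training_flops(parameters: float, tokens: float) -> float:
    """Rough heuristic for dense transformers: about 6 FLOPs per parameter per token."""
    return 6.0 * parameters * tokens


# Hypothetical model sizes, for illustration only.
small = estimate_training_flops(parameters=70e9, tokens=2e12)
large = estimate_training_flops(parameters=1e12, tokens=1.5e13)
print(presumed_systemic_risk(small), presumed_systemic_risk(large))
```

A provider would rely on measured training compute rather than this estimate; the heuristic is only a quick way to see which side of the threshold a planned training run is likely to fall on.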

Who’s Who in the AI Ecosystem: Roles and Responsibilities

The Act defines various roles within the AI ecosystem, each with distinct responsibilities:

- Provider: Develops or trains the AI model.

- Deployer: Integrates the AI model into a product or service.

- Product Manufacturer (if applicable): Ensures product compliance with the Act (e.g., AI-powered devices).

- Importer/Distributor: Ensures compliance for models imported into the EU.

- Authorized Representative (non-EU providers): Acts as a point of contact for EU authorities.

Uncertainties and Exemptions: Gray Areas and Exclusions

The talk acknowledged the existence of a legal gray area surrounding the customisation and fine-tuning of AI models, impacting the classification of deployer vs. provider. Additionally, the Act’s territorial scope focuses primarily on the AI model itself, regardless of where it’s deployed.

Beyond the Act: Exemptions Worth Knowing

The Act exempts certain applications from its regulations:

- National security and military purposes.

- Scientific research and development.

- Personal use of AI systems.

- Free and open-source software (unless prohibited by law or high-risk).

This comprehensive overview provides a deeper understanding of the EU’s AI Act. Remember, it’s crucial to consult official resources like the EU websites for the latest information and authoritative interpretations of the Act. As the AI landscape continues to evolve, the EU’s AI Act

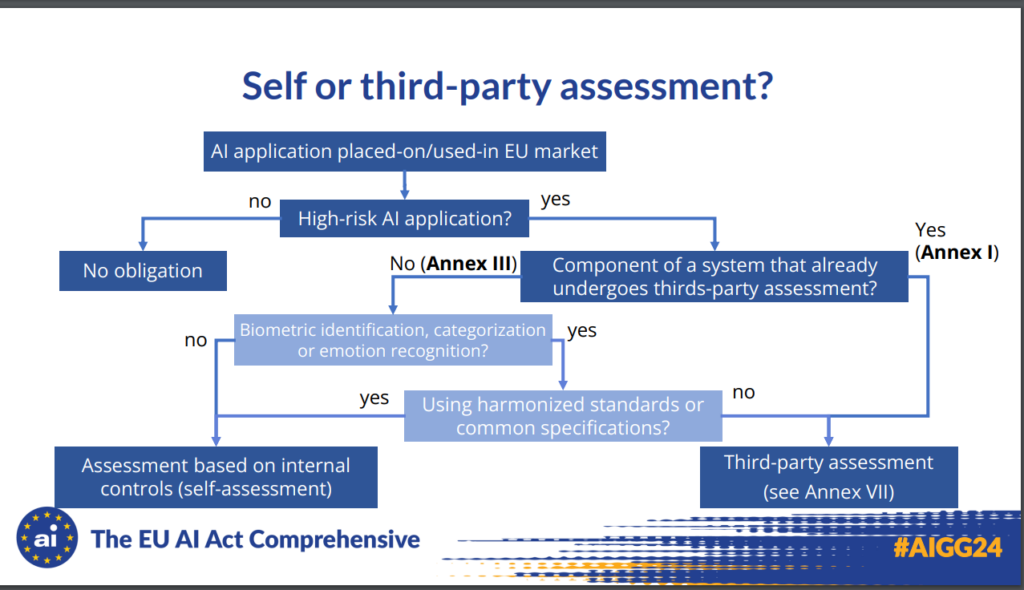

AI Act Conformity Assessment

This session delved into the concept of conformity assessment within the framework of the EU AI Act. Here’s a breakdown of the key takeaways:

What is Conformity Assessment?

Conformity assessment is a crucial process that ensures products, services, processes, systems, or individuals meet the necessary regulations before entering the market. It verifies the safety and compliance of the development process itself.

Two Assessment Routes:

Organisations will face two assessment paths:

- Self-Assessment: Performed by the provider, without the involvement of an external third party.

- Third-Party Assessment: Conducted by an independent notified body approved by national authorities.

The session provided a helpful visual guide to determine which approach is most suitable based on the specific AI system:

Conformity Assessment Measures for Providers:

The focus of conformity assessment lies in the quality of the AI development process and the associated quality management system. Providers are responsible for:

- Verifying their established quality management system adheres to the requirements outlined in Article 17.

- Evaluating the technical documentation to assess the AI system’s compliance with essential requirements for high-risk systems, encompassing data quality, robustness, security, and more.

- Ensuring consistency between the AI system’s design and development process, its post-market monitoring (Article 61 in the Commission’s proposal, renumbered as Article 72 in the adopted text), and the technical documentation.

Key Information for High-Risk AI Model Registration:

- A detailed description of the intended purpose, components, and functions of the AI system.

- A basic overview of the data (inputs) used by the system and its operational logic.

- The current status of the AI system (in service, no longer in service, recalled).

- EU member states where the system is available.

- A copy of the EU declaration of conformity.

- User instructions (excluding systems in law enforcement, migration, and border control).

- Summaries of both the fundamental rights impact assessment and the data protection impact assessment conducted.
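One way to keep track of these registration items internally is a simple record type with a completeness check. The Python sketch below assumes nothing about the actual EU database format: `HighRiskRegistration`, `SystemStatus`, the field names and the sample values are all hypothetical and merely mirror the list above.

```python
from dataclasses import dataclass, fields
from enum import Enum


class SystemStatus(Enum):
    IN_SERVICE = "in service"
    NO_LONGER_IN_SERVICE = "no longer in service"
    RECALLED = "recalled"


@dataclass
class HighRiskRegistration:
    """Hypothetical record mirroring the registration items listed above."""
    intended_purpose: str
    components_and_functions: str
    data_and_logic_overview: str
    status: SystemStatus
    member_states: list[str]
    declaration_of_conformity: str  # e.g. an internal document reference
    fria_summary: str               # fundamental rights impact assessment
    dpia_summary: str               # data protection impact assessment
    user_instructions: str = ""     # not required for the excluded sectors

    def missing_items(self, instructions_required: bool = True) -> list[str]:
        """Return the names of items that are still empty."""
        missing = []
        for f in fields(self):
            if f.name == "user_instructions" and not instructions_required:
                continue
            if getattr(self, f.name) in ("", [], None):
                missing.append(f.name)
        return missing


# Made-up sample values, for illustration only.
record = HighRiskRegistration(
    intended_purpose="Triage support for radiology referrals",
    components_and_functions="Image classifier plus reporting module",
    data_and_logic_overview="Annotated scans; convolutional network",
    status=SystemStatus.IN_SERVICE,
    member_states=["BE", "FR"],
    declaration_of_conformity="",
    fria_summary="Summary on file",
    dpia_summary="Summary on file",
)
print(record.missing_items())
```

The `instructions_required` flag reflects the exclusion noted above for law enforcement, migration and border control systems.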

Information Requirements for Non-High-Risk Systems:

- The system’s trade name and any additional identifiers facilitating identification and traceability.

- A description of the intended purpose.

- A brief explanation for why the model is considered non-high-risk.

- The system’s current status (on the market, no longer in service, recalled).

- EU member states where the system has been placed on the market.

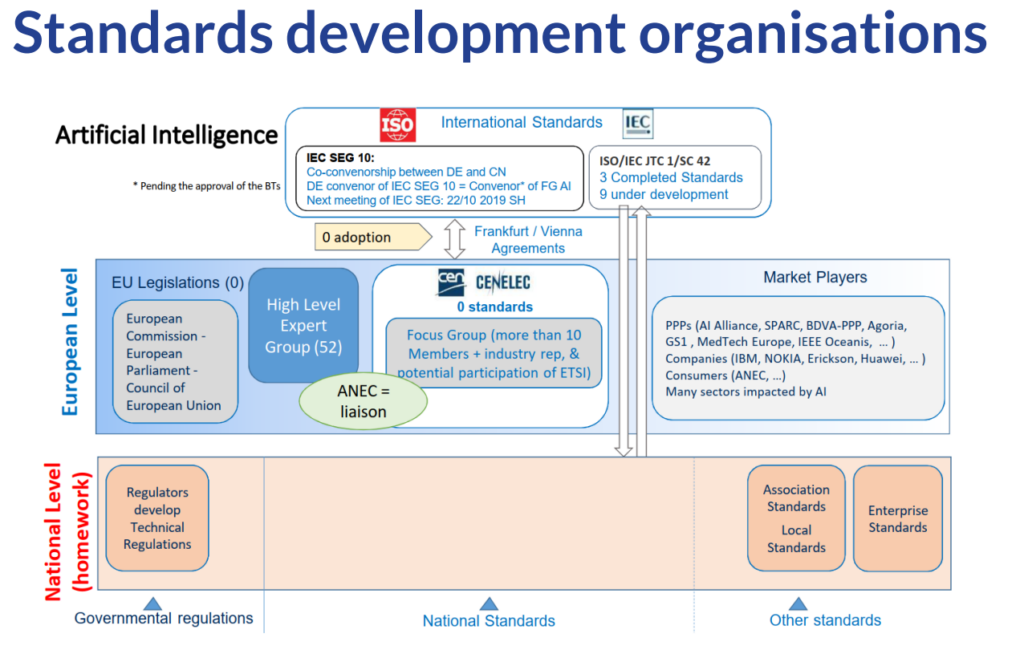

Standardisation of AI Act Documentation

The EU Commission is actively working on standardising the required documentation for the AI Act, although it’s still under development. This standardisation will cover critical areas like:

- Risk management systems

- Data governance and quality of datasets

- Record keeping through logging capabilities

- Transparency and user information provisions

- Human oversight

- Accuracy and robustness specifications

- Cybersecurity specifications

- Quality management systems for AI system providers, including post-market monitoring processes
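Until the standards are published, a team can already track which of these areas it has documented. The short Python sketch below is only a bookkeeping aid: `DOCUMENTATION_AREAS` restates the list above, while the `coverage_gaps` helper and the sample document references are hypothetical.

```python
# Documentation areas named in the list above.
DOCUMENTATION_AREAS = [
    "risk management system",
    "data governance and dataset quality",
    "record keeping (logging)",
    "transparency and user information",
    "human oversight",
    "accuracy and robustness",
    "cybersecurity",
    "quality management system and post-market monitoring",
]


def coverage_gaps(documents: dict[str, str]) -> list[str]:
    """Return the areas with no document reference recorded yet."""
    return [area for area in DOCUMENTATION_AREAS if not documents.get(area)]


# Made-up document references, for illustration only.
documents = {
    "risk management system": "RMS-v2.pdf",
    "human oversight": "oversight-procedure.md",
    "cybersecurity": "",
}
for area in coverage_gaps(documents):
    print("missing:", area)
```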

Recommended Approach: Implementing Standards